Evaluating tech in healthcare: measuring what matters

What I learned from a podcast episode with a leading expert on evaluating public health and digital health interventions in Mozambique and Uganda

Which wheels actually help this machine move forward? Which way is forward? Where is this? Are there some other cogs and wheels here that are doing important things? A good visual metaphor for this whole topic.

“If you just measure one main outcome and ask, for instance:

‘Does [this digital intervention] improve performance in community health workers?’

you might see a positive result in a very closed system that’s highly controlled, but it doesn’t really tell you how [the intervention] works in the field… where there are so many other considerations to do with the context in which it’s implemented.

So for us, creating a Programme Theory and a Logic Model to understand whether, as well as how and why, the intervention worked was an incredibly important first step.”

Dr Shay Soremekun, LSHTM

Answering the “So what?” question

Evals!

They’re (rightly) all the rage right now. Performance, failure modes of LLMs, benchmarking.

Performance is important, but ultimately we also need to observe and evaluate what changed in the world as a result. So if you care about robustly proving real value and clinical outcomes, read on.

I’ve spoken on the podcast to Stephen and Smisha (links in the resources section at the end) about how the clinical evaluation of AI is better thought of at a system level, rather than as the nice, linear story: “We implemented a tool and saw a nice number go in the direction we wanted it to. Yay.”

Except anyone who has actually done this in anger will give you a reaction more like: arghhhh.

What makes this hard is that when we pay attention to the whole system, we realise that our nice shiny tool is actually a small (but, we hope, mighty) cog in a much bigger machine. In healthcare, that machine is a complex, messy, adaptive system. Stuff works or doesn’t work because of a whole bunch of other factors, many of them outside our direct control.

Donors, investors, buyers, salespeople, marketers: they all want that nice, clean, golden metric that helps justify all the great effort and money. We did a study, the methods are solid, primary and secondary endpoints are defined. Tidy.

It worked in that setting, but then when we tried this again in a different place, it just didn’t work.

Not everything that can be counted matters.

Not everything that matters can be counted.

Often attributed to Einstein, but more likely the sociologist William Bruce Cameron

What if there’s a way to measure a deeper, more replicable and scalable type of impact? What if we, in digital health, could better visualise the system and process around the implementation?

The global and public health community, especially those doing this work in so-called low-resource settings, has some great methods that digital health companies can apply to evidence generation and to measuring what matters.

Dr Shay Soremekun, epidemiologist and co-deputy Director of the Centre for Evaluation at the London School of Hygiene and Tropical Medicine, shared with me her experience of using Programme Theory, together with process and impact evaluation, to look beyond the digital health aspects and really get to the heart of what worked, and what drove it.

Context

Malaria, pneumonia and diarrhoeal illness have consistently been among the biggest killers of children in low-resource settings. Treatment of these illnesses in rural areas is variable, and this contributes to high mortality.

What they did

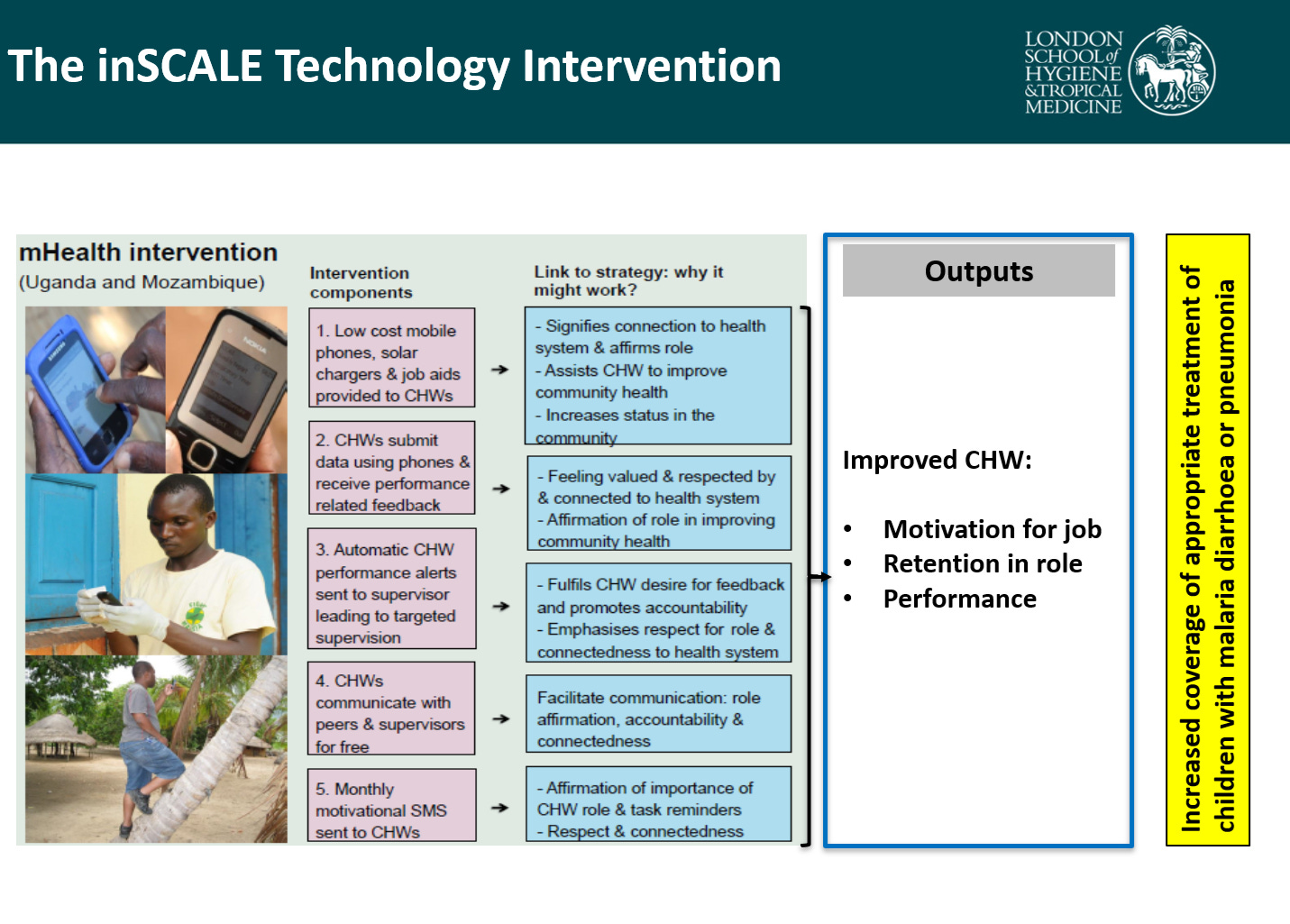

With the Malaria Consortium, the team developed an evaluation of a smartphone-based digital tool, used by community health workers in villages and in health facilities in Mozambique and Uganda, that was intended to improve outcomes for children with malaria, diarrhoea and pneumonia.

They used Programme Theory and Logic Model methods to visualise the system, and worked with local communities to map all the intermediate steps that would lead to the intended outcome. The intervention was mainly aimed at helping community health workers feel supported: clinical decision support to ensure appropriate treatment, plus connection with peers and more senior colleagues. The health workers also received other tools to help with electricity and internet connectivity. It is clearly great that coverage of appropriate treatment improved by 26% (see the references below), but that’s only part of the picture.

To understand why it improved care, if it did, was as important as, if not more important than, understanding that it did.

Because that will help us to understand how we need to potentially modify or adapt the intervention either in the same place or in future places to be able to achieve the same success.

Dr Shay Soremekun

Incidentally, I think the same applies to ‘negative’ results: understanding why something did not work, if it didn’t, is what lets us learn and improve effectively.

Top 3 takeaways

Think in systems

When you are implementing a digital health tool and trying to work out what to measure, think about the layers around your tech, especially people and processes. In this case, the team focused on community health worker performance, but also saw contribution lines to motivation and retention. And ALL OF THAT was part of whether the tool would move the needle on the big long-term goal: an increase in the number of children who got appropriate treatment for malaria, pneumonia and diarrhoeal illness.

A visual of the Theory of Change that was used by the LSHTM team in Mozambique and Uganda. Credit: The Malaria Consortium, LSHTM, and UCL inSCALE Team

There are lots of clever people around the world working on their own versions of this. In my consulting work, I often adapt versions of these for evidence generation strategies, and seeing the system visualised is always a lightbulb moment for the teams I work with. The above is the real, academic, tried-and-tested basis for that.

Observe what other levers of success or failure are critical outside of your primary and secondary endpoints.

If the team hadn’t done the process evaluation, they would only have seen the aggregate improvement. Instead, they were able to pinpoint the biggest lever: the facility workers, where the upside was greatest. That meant the subsequent scale-up work could build in the importance of this aspect.
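For teams who think in code, a logic model can be represented as a small directed graph running from the intervention to the long-term outcome. The sketch below is a minimal illustration under stated assumptions: the node names and links are a hypothetical, simplified model loosely based on the description above, not the published inSCALE Theory of Change, and the helper names (`build_graph`, `causal_pathways`, `intermediate_indicators`) are made up for this example. It shows how enumerating every pathway turns each intermediate step into something you can plan to measure.

```python
from collections import defaultdict

# Hypothetical, simplified logic model loosely based on the description above.
# Node names and links are illustrative assumptions, NOT the published inSCALE Theory of Change.
EDGES = [
    ("smartphone tool", "clinical decision support"),
    ("smartphone tool", "peer and supervisor connection"),
    ("clinical decision support", "CHW performance"),
    ("peer and supervisor connection", "CHW motivation"),
    ("CHW motivation", "CHW retention"),
    ("CHW motivation", "CHW performance"),
    ("CHW retention", "appropriate treatment coverage"),
    ("CHW performance", "appropriate treatment coverage"),
]


def build_graph(edges):
    """Adjacency list: each step maps to the steps it is expected to influence."""
    graph = defaultdict(list)
    for cause, effect in edges:
        graph[cause].append(effect)
    return graph


def causal_pathways(graph, start, goal, path=None):
    """Depth-first enumeration of every route from the intervention to the outcome.

    Assumes the model is acyclic, as logic-model diagrams usually are.
    """
    path = (path or []) + [start]
    if start == goal:
        return [path]
    routes = []
    for nxt in graph.get(start, []):
        routes.extend(causal_pathways(graph, nxt, goal, path))
    return routes


def intermediate_indicators(pathways):
    """Every step strictly between intervention and outcome is a candidate process indicator."""
    steps = set()
    for route in pathways:
        steps.update(route[1:-1])
    return sorted(steps)


if __name__ == "__main__":
    graph = build_graph(EDGES)
    routes = causal_pathways(graph, "smartphone tool", "appropriate treatment coverage")
    for route in routes:
        print(" -> ".join(route))
    print("Measure along the way:", intermediate_indicators(routes))
```

Printing the routes makes the takeaway visible: motivation and retention sit on the path to the outcome just as much as performance does, so a study that only tracks the final number would never see them. A real Theory of Change also records assumptions and contextual factors on each link, which this sketch deliberately leaves out.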

Make it make sense for people

Of course we need to aim for statistical and clinical significance.

But we also need to prioritise local cultural and societal significance. That’s what being people-centred is. That’s what being locally and contextually led is, and it is also what will help us move away from the colonial anti-patterns in global health.

This quote from Shay summed it up:

Taking that co-production approach, making sure... that the right people are leading it... on the ground in the countries in which the interventions are implemented. That alone, and using that to ensure that your evaluations are equitable, has such a massive impact, such a significant impact, on not just the quality of your evaluation, but also on the chances of you seeing the success in the thing that you’re evaluating, and then lastly on the chances of it being adopted and taken up.

Closing thoughts

It is hard to move the needle on clinical outcomes, especially with digital and AI-based tools. If we are to make credible progress, we need to lean in and do the work of measuring what matters and seeing how our tools live, breathe and interact in systems, and then we need to iterate and improve fast. We can’t forget the human and process aspects: make it make sense for the individual.

All of this means working in partnership with patients and healthcare workers, and actually listening. How are we also capturing value for them? How are we enabling them to get closer to those outcomes? Can you measure and observe these?

These are the questions worth answering.

Listen to the full episode with Shay.

Resources

I’ve just started an exciting stint as Guest Editor of a special collection in Nature’s npj Digital Medicine. Got insights to share on implementation in low-resource settings? Submissions are now open!

If you’re into LLM evals and benchmarking for LMIC contexts that are also human-centred, check out this work led by former podcast guest Bilal Mateen at PATH.

If this topic is your jam, here are some other episodes to take you further down this rabbit hole:

Episode 15: Implementation 101 and how to fail well, with Caroline Perrin

Episode 12: Health first, innovation second, with Smisha Aggarwal

Episode 5: What is the right approach for regulation and evaluation of digital health technologies? With Prof Stephen Gilbert

References

inSCALE papers:

https://journals.plos.org/digitalhealth/article?id=10.1371/journal.pdig.0000235

https://journals.plos.org/digitalhealth/article?id=10.1371/journal.pdig.0000217

upSCALE (the roll-out of the project):

https://www.malariaconsortium.org/projects/upscale

https://www.malariaconsortium.org/resources/integrating-upscale-into-the-ministry-of-health

Study protocol for Uganda and Mozambique:

https://trialsjournal.biomedcentral.com/articles/10.1186/s13063-015-0657-6

Bagele Chilisa: one of the foremost voices on decolonial evaluation methodology, and a good place to start for anyone interested in the upcoming talk at LSHTM.

Centre for Evaluation Website: https://www.lshtm.ac.uk/research/centres/centre-evaluation

About Dr Shay Soremekun

Dr Shay Soremekun is an epidemiologist and co-deputy Director of the Centre for Evaluation at the London School of Hygiene and Tropical Medicine.

Her research in child and adolescent health and development covers trials of low-cost disease prevention programmes, and identification of risk factors and mitigation strategies for poor developmental and economic outcomes in this group. She is a member of the UK Government Evaluation and Trial Advice Panel (ETAP), and sits on the steering committee for the John Snow Society. She lectures at postgraduate level on topics of evaluation, epidemiology and public health, and has developed and organises an MSc module in Study Design.

LSHTM page: https://www.lshtm.ac.uk/aboutus/people/soremekun.shay

About Shubs

Hey, I’m Shubs. I am a primary care physician by background, and host of the Global Perspectives on Digital Health podcast, which brings to life what it takes to create impact with AI and digital health for underserved communities.

I help digital health companies and investors integrate pragmatic clinical leadership that helps weld together business goals and real healthcare impact.

I’ve worked as a senior clinician in both high- and low-resource settings, led tech teams for a scaling digital health company serving millions of patients globally, and done the day-to-day work on product quality, regulatory requirements and evidence generation. At a policy level, I’ve led work creating clinical evaluation frameworks with the World Health Organization and the International Telecommunication Union.

I am Guest Editor of a special collection in Nature’s npj Digital Medicine focused on low-resource settings.

Need help embedding clinical leadership and quality whilst still succeeding commercially?

Want to increase impact toward underserved communities as a digital health company or investor? Want to bring your implementation and impact story to life?

🚀 Work with me. Get in touch at hello@shubs.me